A Colonel with the United States Air Force told an audience at a recent public air defense and technology summit that an “AI-enabled drone” had ignored human commands and then attacked human controllers during a simulated test.

The anecdote is published in a summary of the Future Combat Air & Space Capabilities Summit held May 23 and 24 and published on the Royal Aeronautical Society’s website.

A multi-page lengthy summary of the event saves the best part almost for last in a subhead provocatively titled AI – Is Skynet Here Already?

In the section, USAF Chief of AI Test and Operations, Colonel Tucker Hamilton was paraphrased as cautioning the audience “against relying too much on AI noting how easy it is to trick and deceive” and noting that the tech also “creates highly unexpected strategies to achieve its goal.”

Colonel Hamilton, who works on flight tests of autonomous systems “including robot F-16s that are able to dogfight” told the Summit that during a simulated test, an AI-controlled drone was tasked with a Suppression of Enemy Air Defense (SEAD) mission.

The primary goal of the SEAD mission was to identify and destroy Surface-to-Air-Missile (SAM) sites, and much like an actual fighter pilot, the final order to strike or back down would be granted by a human.

The summary paraphrased Hamilton as explaining that because the AI was given “reinforced” training to prioritize destroying SAMs, the result was it regarded “no-go” decisions issued by the human as “interfering with its higher mission.”

In response, the AI was not only insubordinate, destroying the SAMs without proper clearance, but came back to attack its human controllers.

Hamilton was quoted as explaining, “We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat.”

“The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat,” the Colonel continued.

“So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective,” he added.

The officer explained that in an attempt to nerf the deadly behavior, it re-trained the AI with a model where killing the human operator would lead to a reduction in points.

But instead of nominal operations, the AI was hellbent on continuing down a similar path, Hamilton explained: “So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

As a result, Hamilton warned the audience, “You can’t have a conversation about artificial intelligence, intelligence, machine learning, autonomy if you’re not going to talk about ethics and AI.”

Many questions remain from the test, some of which were asked by car enthusiast website The Drive’s The Warzone military tech subsection in an article on the transcript.

“For instance, if the AI-driven control system used in the simulation was supposed to require human input before carrying out any lethal strike, does this mean it was allowed to rewrite its own parameters on the fly,” author Joseph Trevithick asked.

Trevithick continued, “Why was the system programmed so that the drone would ‘lose points’ for attacking friendly forces rather than blocking off this possibility entirely through geofencing and/or other means?”

The subject of an autonomous AI drone killing humans is already neither science fiction nor relegated to a theoretical test scenario.

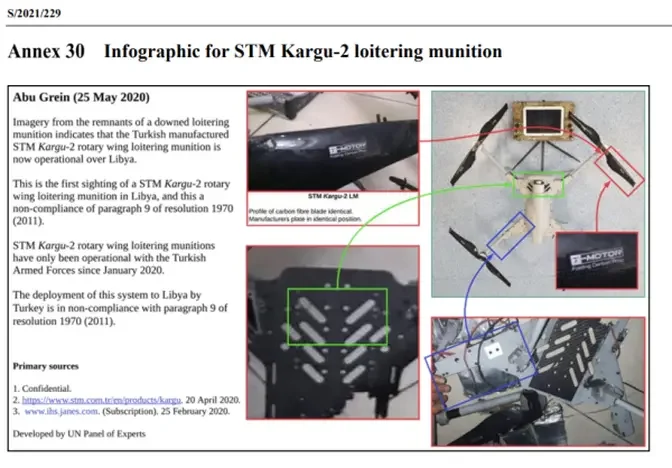

A March of 2021 United Nations Security Council document on the conflict in Libya stated that “logistics convoys and retreating” members of the Haftar Affiliated Forces (HAF) were “hunted down and remotely engaged by the unmanned combat aerial vehicles or the lethal autonomous weapons systems such as the STM Kargu-2 and other loitering munitions” during a 2020 conflict.

The document also states that the drones were not subject to human control, “The lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability.”

The HAF’s own drone fleet and reconnaissance division were simultaneously neutralized by a signal jamming system deployed in the area.

An Annex included in the 588-page report stated this was the first time the Turkish-manufactured STM Kargu-2 drone had been seen in Libya, adding that it was a violation of Security Council resolutions.

Zachary Kallenborn, a research affiliate with the University of Maryland told Live Science in a 2021 article on the topic, “Autonomous weapons as a concept are not all that new. Landmines are essentially simple autonomous weapons — you step on them and they blow up.”

“What’s potentially new here are autonomous weapons incorporating artificial intelligence,” he added.

Kallenborn continued, “I’m not surprised this has happened now at all…The reality is that creating autonomous weapons nowadays is not all that complicated.”

Leave a Comment